Our latest episode features Nicholas Holland (SVP of Product & AI at HubSpot) and explains how AI is actually changing go-to-market teams:

AI cuts rep research time and turns calls into structured insight

“AI Engine Optimization” (AEO) is becoming the new SEO

This conversation isn’t speculative—it’s a blueprint.

Listen to Episode 42 on Apple Podcasts

🚨 Upcoming Workshop: Sept 18 — AI Product Strategy for Realists

Use promo code pod30 at checkout to get 30% off your registration!

Join us for a live 90-minute workshop that goes beyond the hype. We’ll walk through real frameworks, raw mistakes, and how to make AI product strategy actually work—for small teams, scale-ups, and enterprise leaders.

AI Product Strategy FAQ, Minus the Bullsh*t

Over the past few months, we’ve been collecting the most common—and most misunderstood—questions about AI product strategy. What we found were recurring patterns of confusion, hype, and hope. This article breaks down those questions one by one with honest answers, uncomfortable truths, and hard-won lessons from teams actually building and shipping AI products.

Each section includes:

A blunt reality check (“Uncomfortable Truth”)

A strategic lens for tackling it

A sticky insight to anchor your messaging

A practical takeaway

This is not a “how AI works” explainer. This is how to make it useful—inside a real product.

Q1: How do we choose the right use case for AI in our product that actually delivers value?

Uncomfortable Truth: The best use cases might be internal—not flashy or customer-facing. If you’re just “adding AI” for the optics, you’re already off-track.

Strategic Frame: Don’t chase the cool feature—hunt down the messiest workflow and blow it up.

Always Remember: Your AI should solve a problem your users complain about—not a problem your team finds interesting.

Research This: Map the top 10 recurring tasks inside your product (or across your internal ops). Which of them have the highest time cost and lowest user satisfaction? That’s your AI opportunity space.

Real Examples: Altan (natural language app builder); internal fraud detection automation; AI for helpdesk triage.

Takeaway: Pick the ugliest, least scalable problem your users hack around with spreadsheets. Then automate that.
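If you want to turn the "Research This" exercise above into something you can sort, here is a minimal sketch. It assumes you have a rough time cost and a 1-to-5 satisfaction rating for each task. The `Task` fields, the scoring formula, and the sample numbers are all illustrative assumptions, not a standard method.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    minutes_per_week: float  # assumed unit for time cost per user
    satisfaction: float      # assumed survey scale: 1 (hate it) to 5 (love it)


def opportunity_score(task: Task) -> float:
    """Higher when a task eats more time and users like it less."""
    dissatisfaction = 5 - task.satisfaction  # 0 (happy) to 4 (miserable)
    return task.minutes_per_week * dissatisfaction


def rank_tasks(tasks: list[Task]) -> list[Task]:
    """Sort tasks from biggest to smallest AI opportunity."""
    return sorted(tasks, key=opportunity_score, reverse=True)


# Made-up sample data for illustration only.
tasks = [
    Task("Reconcile invoices in a spreadsheet", 120, 1.5),
    Task("Tag support tickets", 90, 2.5),
    Task("Update profile settings", 5, 4.0),
]

ranked = rank_tasks(tasks)
for t in ranked:
    print(f"{t.name}: {opportunity_score(t):.0f}")
```

The multiplication is a deliberate simplification: a task only scores high when it is both slow and disliked. Swap in whatever weighting matches your own research.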

Q4: How do we handle data privacy and ethics when integrating AI features?

Uncomfortable Truth: Most tools don’t offer true privacy—they use your data to train their models. That’s not a technical flaw—it’s a business choice.

Strategic Frame: If trust is central to your brand, bake it into the infrastructure. Build sandboxes. Offer guarantees. Publish your governance.

Always Remember: You don’t get to ask users for their data and their forgiveness.

Research This: Ask your legal, compliance, or procurement partners what requirements would be non-negotiable for adopting a third-party AI tool. Then apply those to your own product.

Example Guidance: Make “zero training from user data” a tiered feature—or your default.

Takeaway: If you’re targeting enterprise buyers, your AI feature won’t get through procurement unless you have strict privacy toggles and a clear usage log.
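As a rough illustration of "strict privacy toggles and a clear usage log," here is a minimal sketch where training on user data is off by default and every AI call is recorded. `PrivacySettings`, `UsageLog`, `call_ai_feature`, and the field names are hypothetical, not taken from any real product or library.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class PrivacySettings:
    # Hypothetical toggle: training on user data stays off unless a customer opts in.
    allow_training_on_user_data: bool = False


@dataclass
class UsageLog:
    entries: list = field(default_factory=list)

    def record(self, user_id: str, feature: str, used_for_training: bool) -> None:
        """Append one auditable entry per AI call."""
        self.entries.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user_id": user_id,
            "feature": feature,
            "used_for_training": used_for_training,
        })


def call_ai_feature(user_id: str, feature: str,
                    settings: PrivacySettings, log: UsageLog) -> bool:
    """Log the call and report whether its data may be used for training."""
    may_train = settings.allow_training_on_user_data
    log.record(user_id, feature, used_for_training=may_train)
    return may_train


settings = PrivacySettings()  # defaults to zero training
log = UsageLog()
may_train = call_ai_feature("user-123", "contract_summary", settings, log)
print(may_train, len(log.entries))
```

The point of the design is that the safe behavior is the default and the log exists whether or not anyone asks for it, which is what a procurement review is likely to check first.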

Q5: How do we measure the success of AI features in a product?

Uncomfortable Truth: More engagement doesn’t always mean more value. In AI, time spent might mean confusion—or masked frustration. People may feel delight and friction in the same moment, and without qualitative research, you won’t know which signal you’re shipping.

Strategic Frame: Define one high-value outcome. Build just enough UI to validate whether users reach it.

Always Remember: Don’t just watch what users do—listen for what they expected to happen.

Research This: Run a usability test where you ask users to explain what they expect the AI feature to do before using it—then again after. Once you've delivered an output that surprises them, ask them what outcomes it enables.

Takeaway: In a contract automation tool, the success metric isn’t “time in app”—it’s “first draft accepted with zero edits.” That’s your true win signal.
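To make the "first draft accepted with zero edits" signal concrete, here is a minimal sketch of how it could be computed from product events. The `DraftEvent` shape and the sample data are assumptions for illustration; your own event schema will differ.

```python
from dataclasses import dataclass


@dataclass
class DraftEvent:
    draft_id: str
    accepted: bool            # did the user accept the AI-generated draft?
    edits_before_accept: int  # edits the user made before accepting or abandoning


def zero_edit_acceptance_rate(events: list[DraftEvent]) -> float:
    """Share of all generated drafts that were accepted with no edits."""
    if not events:
        return 0.0
    wins = sum(1 for e in events if e.accepted and e.edits_before_accept == 0)
    return wins / len(events)


# Made-up sample data for illustration only.
events = [
    DraftEvent("d1", accepted=True, edits_before_accept=0),
    DraftEvent("d2", accepted=True, edits_before_accept=3),
    DraftEvent("d3", accepted=False, edits_before_accept=0),
    DraftEvent("d4", accepted=True, edits_before_accept=0),
]

rate = zero_edit_acceptance_rate(events)
print(f"Zero-edit acceptance rate: {rate:.0%}")
```

Note that the denominator is every draft generated, not just accepted ones, so abandoned drafts count against the feature. That choice is an assumption; pick the denominator that matches the outcome you defined.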

Q6: What’s the best way to communicate AI capabilities to non-technical stakeholders or users?

Uncomfortable Truth: AI isn’t novel anymore—outcomes are.

Strategic Frame: Sell transformation, not tech. Show how life is better with the tool than without.

Always Remember: Once someone experiences the magic, it doesn’t matter what powers it.

Research This: Ask 5 users to explain your AI feature to a friend, using their own words. Their phrasing will tell you how clearly the value lands—and what metaphors or language they trust.

Examples:

GlucoCopilot: Turns data chaos into peace of mind.

Flo: Makes symptom tracking feel intuitive and empowering.

Lovart: Auto-generates brand kits from a single prompt.

Takeaway: Everyone’s building outputs. You win by delivering outcomes. Spreadsheets are useful to power users—but most people just want the insight and what to do next. AI should skip the formula and deliver the finish line.

Q7: How do we monetize AI in a way users will actually pay for?

Uncomfortable Truth: Most AI products aren’t worth paying for. Saving users time sounds valuable—but it rarely converts.

Strategic Frame: Whatever you plan to charge for your platform, build something so valuable that power users would pay 5x that price.

Always Remember: SaaS platforms set recurring prices low enough to feel negligible to customers. Your job is to build something they can't live without.

Research This: When researching pricing, don't talk about the product at all. Research the cost of the problem, and find out what people would pay for a perfect solution to it.

Takeaway: If you want revenue, don’t promise “efficiency.” Deliver a win they couldn’t achieve on their own—and make that outcome your product.

Q8: How can I find out if my AI product idea is achievable?

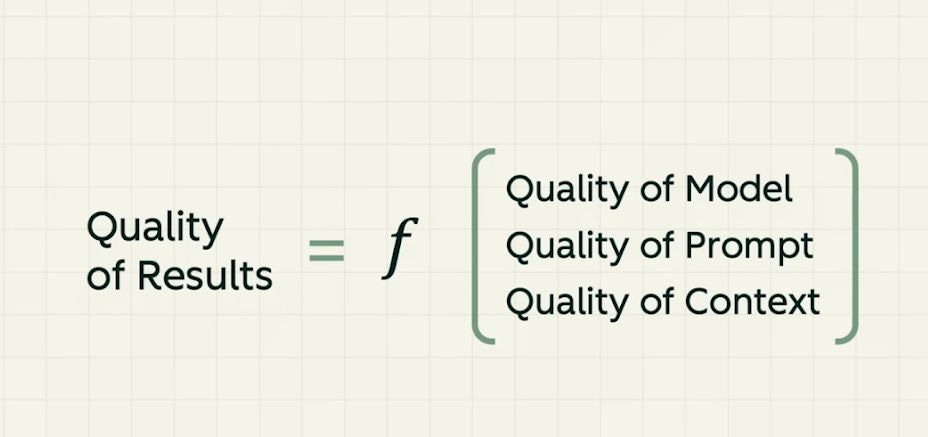

Uncomfortable Truth: Most AI product ideas sound good until you try to build them. The biggest blocker isn’t the model—it’s the missing context, fragmented data, or fuzzy workflows that make it hard to deliver anything reliably.

Strategic Frame: Before you scope the feature, scope the dependency chain. What data, context, and decision logic would an AI need to produce something consistent and useful?

Always Remember: AI models fail to deliver what you want when you don't give them enough specifics and context.

Research This: Run a digital ethnography of how and why people use your products and complementary products. Find out the exact inputs and outputs they need to succeed. Determine the exact criteria necessary to deliver a monumental leap forward.

Takeaway: Don’t just validate demand—validate deliverability. If you can’t consistently access the context your AI needs, you’re not ready to ship it.

🔁 Want to go deeper? Use promo code pod30 at checkout to get 30% off your registration.

Join our live Sept 18 workshop where we unpack these strategies with real examples, live critiques, and practical templates. Designed for teams who want more signal, less noise.

Check out the Design of AI podcast

Where we go behind the scenes with product and design leaders from Atlassian, HubSpot, Spotify, IDEO, and more. You’ll hear exactly how they’re building AI-native workflows, designing agentic systems, and transforming their teams.

🎧 Listen on Spotify | 🍎 Listen on Apple | ▶️ Watch on YouTube

Recommended AI Product Strategy Episodes:

42. HubSpot’s Head of AI on How AI Rewrites Customer Acquisition & Marketing

41. Vibe Coding Will Disrupt Product — Base44’s Path to $80M Within 6 Months

40. Secrets to Successful Agents: Atlassian’s Strategy for Success

27. Implementing AI in Creative Teams: Why Adoption Will Be the Hard Part

26. Designing a New Relationship with AI: Critical Product Lessons

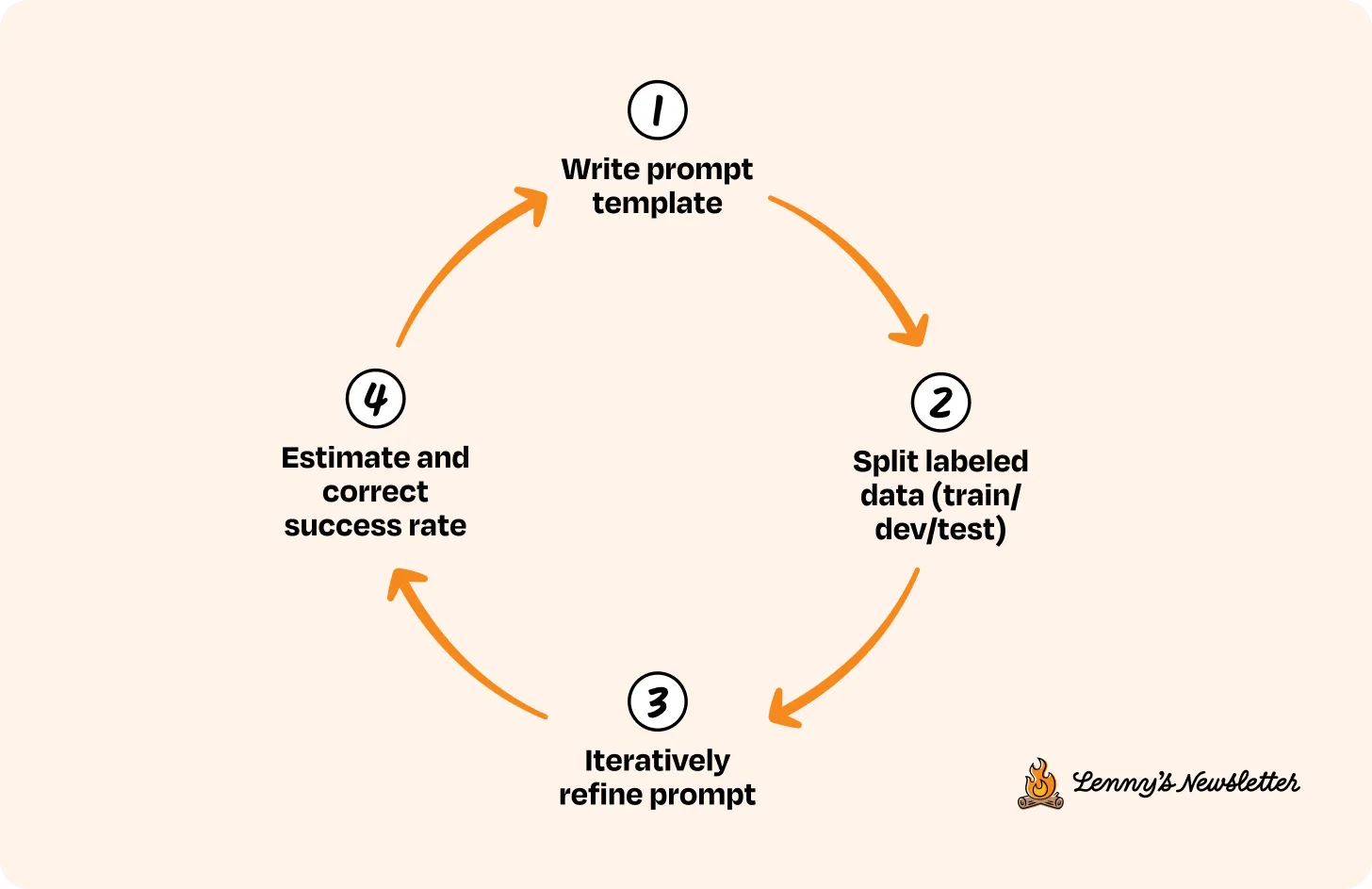

Bonus Insight: How to Build Eval Systems That Actually Improve Products

Great AI products don’t just ship features—they measure whether they actually worked. This piece by Kanjun Qiu offers a no-fluff framework for building evaluation systems that ground teams in outcomes, not opinions. Stop guessing. Start testing what truly improves real-world usage.