Our latest guest is Maya Ackerman, an AI‑creativity researcher, professor, and author of Creative Machines: AI, Art & Us (Wiley), as well as the founder of WaveAI and LyricStudio (see her recent collaboration with NVIDIA).

Maya’s perspective is not just insightful — it’s a necessary reality check for anyone building AI today. She challenges the comforting narrative that AI is a neutral tool or a natural evolution of creativity. Instead, she exposes a truth many in tech avoid: AI is being deployed in ways that actively diminish human creativity, and businesses are incentivized to accelerate that trend.

Her research shows how overly aligned, correctness-first models flatten imagination and suppress the divergent thinking that defines human originality. But she also shows what’s possible when AI is designed differently — improvisational systems that spark new directions, expand a creator’s mental palette, and reinforce human authorship rather than absorbing it.

This episode matters because Maya names what the industry refuses to admit. The problem is not “AI getting too powerful,” it’s AI being used to replace instead of elevate. Businesses are applying it as a cost-cutting mechanism, not a creative amplifier. And unless product leaders intervene, the damage to creativity — and to the people who rely on it for their livelihoods — will become irreversible.

Listen to the episode on Spotify, Apple Podcasts, or YouTube

We’re engineering a global creative regression and pretending we aren’t.

Generative AI could radically expand human imagination, but the systems we deploy today overwhelmingly suppress it. The literature is unequivocal:

AI boosts creative output only when tools are intentionally designed for exploration, not correctness.

When aligned toward predictability, AI drives conformity and sameness.

The rise of “AI slop” is not an insult — it’s the logical outcome of misaligned incentives.

New evidence shows that AI-assisted outputs become more similar as more people use the same tools, reducing collective creativity even when individual outputs look “better.”

Homogenization is measurable at scale: marketing, design, and written content generated with AI converge toward the same tone and syntax, lowering engagement and cultural diversity.

Repeated reliance on AI weakens human originality over time — users begin outsourcing ideation, losing confidence and capacity for divergent thought.

Resources:

The Impact of AI on Creativity: https://www.researchgate.net/publication/395275000_The_Impact_of_AI_on_Creativity_Enhancing_Human_Potential_or_Challenging_Creative_Expression

Generative AI and Creativity (Meta-Analysis): https://arxiv.org/pdf/2505.17241

AI Slop Overview: https://en.wikipedia.org/wiki/AI_slop

Generative AI Enhances Individual Creativity but Reduces Collective Novelty: https://pmc.ncbi.nlm.nih.gov/articles/PMC11244532/

Generative AI Homogenizes Marketing Content: https://papers.ssrn.com/sol3/Delivery.cfm/5367123.pdf?abstractid=5367123

Human Creativity in the Age of LLMs (decline in divergent thinking): https://arxiv.org/abs/2410.03703

BOTTOM LINE: If your product optimizes for correctness, brand safety, and throughput before originality, you are actively contributing to the global collapse of creative quality. AI must be designed to spark—not sanitize—human imagination.

Award-winning creative talent is disappearing at scale, and the trend is accelerating.

The global creative workforce is shrinking faster than at any time in modern history. Companies claim AI is “enhancing creativity,” yet most restructuring reveals the opposite: AI is being deployed primarily to cut labor costs. Across the wider economy, layoff announcements have topped 1.1 million this year, the most since the 2020 pandemic.

What’s happening now:

Omnicom announced 4,000 job cuts and shut multiple agencies — Reuters reporting: https://www.reuters.com/business/media-telecom/omnicom-cut-4000-jobs-shut-several-agencies-after-ipg-takeover-ft-reports-2025-12-01/

WPP, Publicis, and IPG executed multi-round layoffs across design, writing, strategy, and production.

Digiday interviews confirm AI is used mainly to eliminate junior and mid-level creative roles: https://digiday.com/marketing/confessions-of-an-agency-founder-and-chief-creative-officer-on-ais-threat-to-junior-creatives/

The most important read on the future & destruction of agencies comes from

. She always brings a powerful and necessary mirror to the shitshow that is the modern corporate world. Read it here:

Freelancers and independent creatives are being hit even harder:

UK survey: 21% of creative freelancers already lost work because of AI; many report sharply lower pay — https://www.museumsassociation.org/museums-journal/news/2025/03/report-finds-creative-freelancers-hit-by-loss-of-work-late-pay-and-rise-of-ai/

Illustrators, motion designers, and concept artists report declining commissions as clients adopt Midjourney-style pipelines.

Voice actors face shrinking bookings due to synthetic voice models.

Stock photography, stock audio, and digital concepting have been heavily cannibalized by tools like Midjourney, Runway, and Suno.

The research into AI shows even deeper risks:

The Rise of Generative AI in Creative Agencies — confirms agencies deploy AI for margin protection rather than creative innovation: https://www.diva-portal.org/smash/get/diva2%3A1976153/FULLTEXT03.pdf

IFOW/Sussex study shows AI exposure correlates with lower job quality and salary stagnation for creatives: https://www.ifow.org/news-articles/marley-bartlett-research-poster---ai-job-quality-and-the-creative-industries

BOTTOM LINE: Creative roles are vanishing because AI is being optimized for efficiency rather than imagination. If we want creative industries to survive, AI must expand human originality, not replace the people who produce it.

Please participate in our year-end survey

We are studying how AI is restructuring careers, skills, and expectations across product, design, engineering, research, and strategy.

Your responses influence:

the direction of Design of AI in 2025,

what questions we investigate through research,

what frameworks we build to help leaders adapt—and protect—their teams.

Take the survey: https://tally.so/r/Y5D2Q5

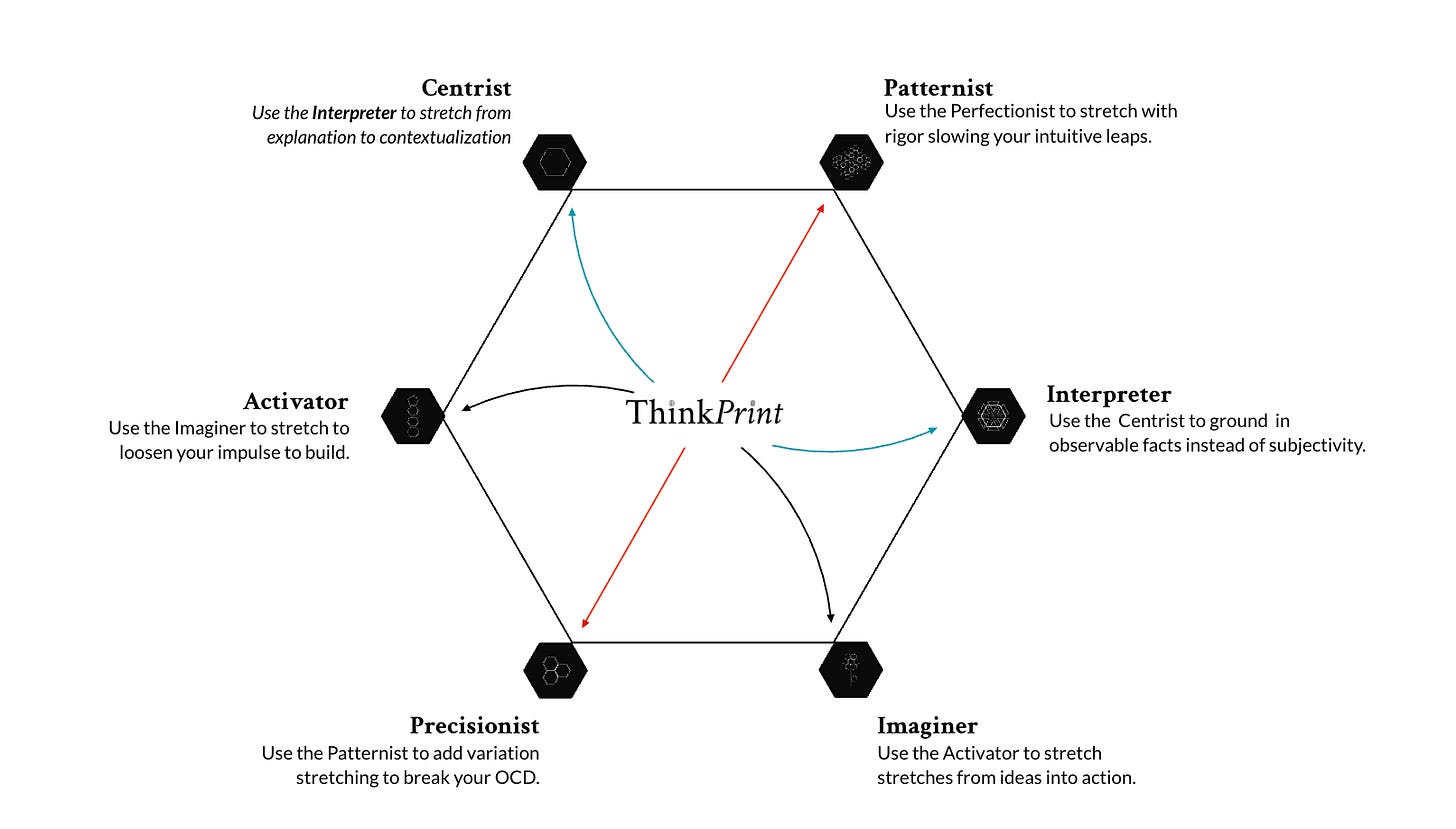

Understand your cognitive style so you know how to best leverage AI to boost you

The Creative AI Academy has developed an assessment tool to help you understand your creative style. We all tackle problems differently and come up with novel solutions using different methods. Take the ThinkPrint assessment to get a blueprint of how you ideate, judge, refine, and decide. Knowing this will show you the ways in which AI can boost, rather than undermine, your originality.

For me it was powerful to see my thinking style mirrored back at me. It gave structure to what enhances and undermines my creativity, meaning I better understand what role (if any) AI should play in expanding my creative capabilities. Thank you to Angella Tapé for demonstrating this tool and presenting the perfect next evolution of Dr. Ackerman’s lessons about needing AI to be a creative partner, not a cannibalizer.

BOTTOM LINE: Without cognitive self-awareness, you’re not “partnering” with AI—you’re surrendering your creative identity to it. Take the ThinkPrint assessment and redesign your workflow around human-led, AI-supported thinking.

We are trading away human intellect for productivity—and the safety evidence is damning.

The research is now impossible to ignore: AI makes us faster, but it makes us worse thinkers.

A major multi-university study (Harvard, MIT, Wharton) found that users with AI assistance worked more quickly but were “more likely to be confidently wrong.”

Source: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4573321

This pattern shows up across cognitive science:

Stanford and DeepMind researchers found that relying on AI “reduced participants’ memory for the material and their ability to reconstruct reasoning steps.”

Source: https://arxiv.org/abs/2402.01832

EPFL showed that routine LLM use “led to measurable declines in writing ability and originality over time.”

Source: https://arxiv.org/abs/2401.00612

University of Toronto researchers warn that repeated LLM use “narrows human originality, shifting users from creators to evaluators of machine output.”

Source: https://arxiv.org/abs/2410.03703

In other words: we are outsourcing the exact cognitive muscles that make human thinking valuable — creativity, reasoning, comprehension — and replacing them with pattern-matching convenience.

And while we weaken ourselves, the companies building the systems shaping our cognition are failing at even the most basic safety expectations.

The AI Safety Index (Winter 2025) reported:

“No major AI developer demonstrated adequate preparedness for catastrophic risks. Most scored poorly on transparency, accountability, and external evaluability.”

Source: https://futureoflife.org/ai-safety-index-winter-2025/

A companion academic review by Oxford, Cambridge, and Georgetown concluded:

“Safety commitments across leading LLM developers are inconsistent, largely self-regulated, and often unverifiable.”

Source: https://arxiv.org/pdf/2508.16982

We are weakening human cognition while trusting companies that cannot prove they are safe. There is no version of this trajectory that ends well without deliberate intervention.

Resources:

The Hidden Wisdom of Knowing in the AI Era:

A Critical Survey of LLM Development Initiatives: https://arxiv.org/pdf/2508.16982

Future of Life AI Safety Index (Winter 2025): https://futureoflife.org/ai-safety-index-winter-2025/

Supporting Safety Documentation (PDF): https://cdn.sanity.io/files/wc2kmxvk/revamp/79776912203edccc44f84d26abed846b9b23cb06.pdf

BOTTOM LINE: Tools that reduce effort without building capability are not accelerators; they are cognitive liabilities. Product leaders must design for mental strength, not dependency.

Schools are producing prompt operators, not original thinkers.

Education systems are bolting AI onto decades-old learning models without rethinking what learning is. Instead of cultivating reasoning, imagination, and embodied intelligence, schools are teaching children to rely on AI systems they cannot critique.

Resources:

UNESCO: AI & the Future of Education: https://www.unesco.org/en/articles/ai-and-future-education-disruptions-dilemmas-and-directions

Beyond Fairness in Computer Vision: https://cdn.sanity.io/files/wc2kmxvk/revamp/79776912203edccc44f84d26abed846b9b23cb06.pdf

AI Skills for Students: https://trswarriors.com/ai-education-preparing-students-future/

BOTTOM LINE: If we do not redesign education, we will create a generation of humans who can operate AI but cannot outthink, challenge, or transcend it.

Featured AI Thinker: Luiza Jarovsky

Luiza Jarovsky is one of the most essential voices in AI governance today. At a time when global AI companies are actively pushing to loosen regulation—or bypass it entirely—Luiza’s work provides a critical counterbalance rooted in human rights, safety, law, and long-term societal impact.

Why her work matters now:

She exposes the structural risks of deregulated AI adoption across governments and corporations.

She documents how weak or performative governance puts vulnerable communities at disproportionate risk.

She offers practical frameworks for ethical, enforceable AI oversight.

Follow her work:

BOTTOM LINE: If you build or deploy AI and you are not following Luiza’s work, you are missing the governance lens that will define which companies survive the coming regulatory wave.

Recommended Reality Checks

Two critical signals from the field this week:

Ethan Mollick on the accelerating automation of creative workflows

https://x.com/emollick/status/1996418841426227516

AI is quietly outperforming human creative processes in categories many believed were “safe.” The speed of improvement is outpacing organizational awareness.

Jeffrey Lee Funk on markets losing patience with empty AI narratives

https://x.com/jeffreyleefunk/status/1996612615850676703

Investors are separating real AI value from hype. Companies promising transformation without measurable impact are being punished.

BOTTOM LINE: The creative and product landscape is shifting beneath our feet. Those who don’t adapt—intellectually, strategically, and operationally—will lose relevance.

Final Reflection — Legacy Is a Product Decision

Everything in this newsletter points to a single, unavoidable truth:

AI does not define our future. The product decisions we make do.

We can build tools that:

expand human originality,

strengthen cognitive resilience,

elevate creative careers,

and produce a generation capable of thinking beyond the machine.

Or we can build tools that:

replace the creative class,

hollow out human judgment,

weaken educational outcomes,

and leave society dependent on systems controlled by a handful of companies.

As product leaders—designers, strategists, researchers, technologists—we decide which future gets built.

Legacy isn’t abstract. It’s the cumulative effect of every interface we design, every shortcut we greenlight, every metric we reward, and every model we deploy.

If you want to build AI that strengthens humanity instead of diminishing it, reach out. Let’s design for human outcomes, not machine efficiency.

arpy@ph1.ca