Healthcare is constantly highlighted as the industry that will benefit the most from AI. The prospective opportunities are endless: improved access to services, quality of service, patient outcomes, and medical research. One analysis predicts that the healthcare industry could save up to $360B a year by implementing AI.

That’s why we invited an expert to discuss what other industries can learn from healthcare’s massive AI opportunity: Spencer Dorn, Vice Chair and Professor of Medicine at the University of North Carolina. He is a contributor to Forbes and one of LinkedIn’s Top Voices speaking on Healthcare + Innovation.

Listen on Spotify | Listen on Apple Podcasts

Key takeaways from the episode:

AI has been impacting healthcare for years, especially in creating Electronic Health Records (EHRs) as a way of centralizing information

AI is being explored today as an assistant to medical professionals (e.g., virtual/digital scribes) and across a variety of diagnosis scenarios (video)

But the rollouts have been plagued by consistent issues related to adoption and poor comprehension of the actual problems

Getting EHRs implemented took an Obama-era law and incentive plan

Many of the initiatives aiming to speed up access to healthcare and diagnosis are undermining the relationships that run through the patient journey

Technology is rarely the solution because the problem is typically bureaucracy, culture, lack of incentives, and externalities

Lessons for you:

Beware complexity: Most of the AI products sold by major corps and consultancies solve micro-problems and are not designed to tackle complex problems

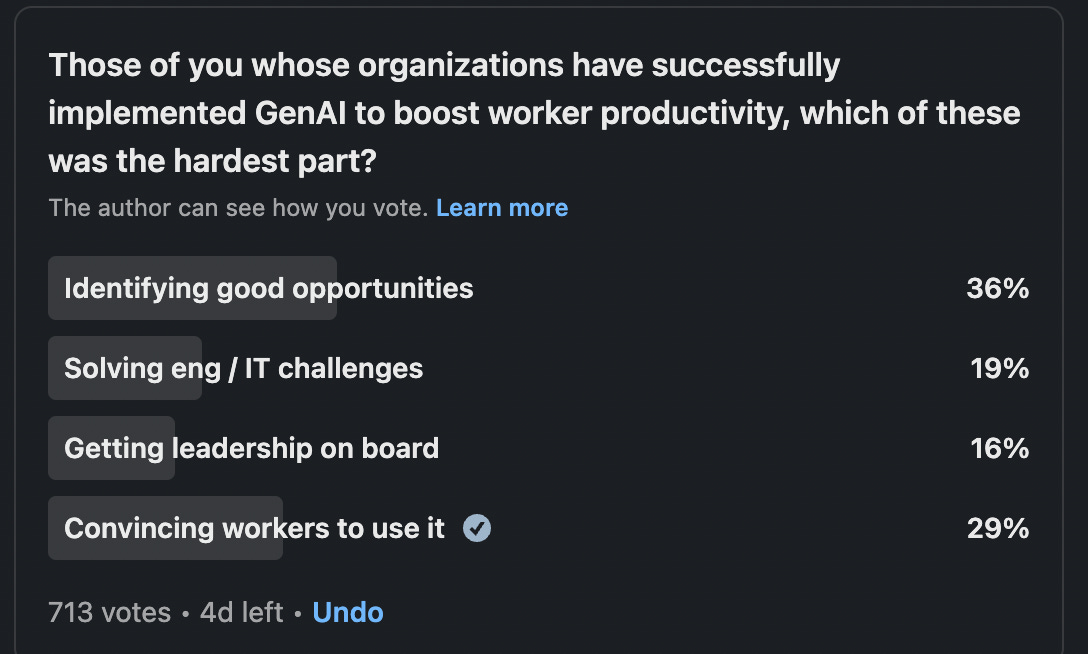

Worry about adoption: No matter how brilliant your solution is, getting buy-in and adoption within enterprises will be the most pressing challenge

Think of problems as systems: JTBD and user stories tend to oversimplify problems and underrepresent the range of factors, dependencies, and implications of a problem on the system as a whole

Ethnography is key: If you want to make a positive change to a problem space you need to leverage deep qualitative research techniques, like ethnography, to document and assess what matters and why

Monitor for unintended consequences: Even after dedicating lots of time to research and planning, we must keep monitoring for unintended consequences that may create more work or more anxiety for stakeholders within the system

Challenges building truly human-centred AI products and solutions

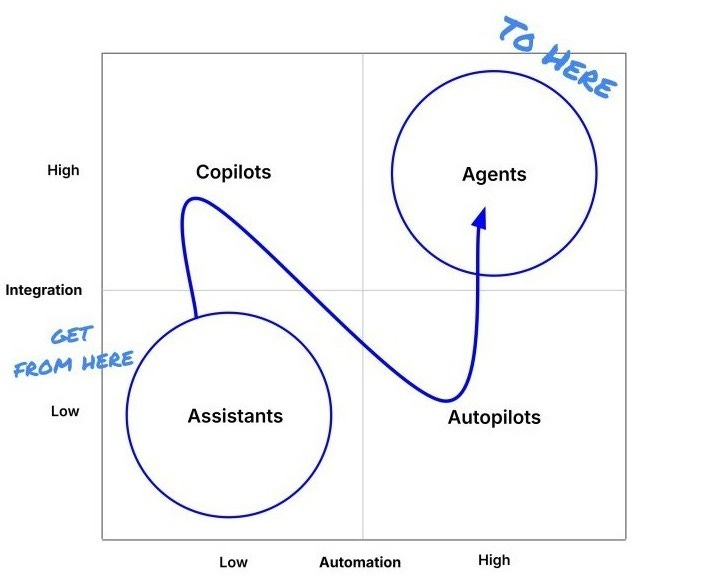

AI thought leaders love to push this message of getting to the future quickly. It creates this narrative that we’re all falling behind.

But let’s slow down and recognize that there are countless questions to be addressed before throwing everything out in favour of the shiny new system. This paper from Microsoft explored the many questions that users are posing about using AI agents. These are very important questions that every team should be able to answer clearly for their users before deploying any solution.

This poll from Google’s former Chief Decision Scientist highlights that the technical part of implementing AI is no longer the biggest barrier; understanding humans is. If the organizations polled, ones that have successfully implemented AI, are struggling to identify good opportunities and to convince people to use it, then imagine what struggles an everyday org will have.

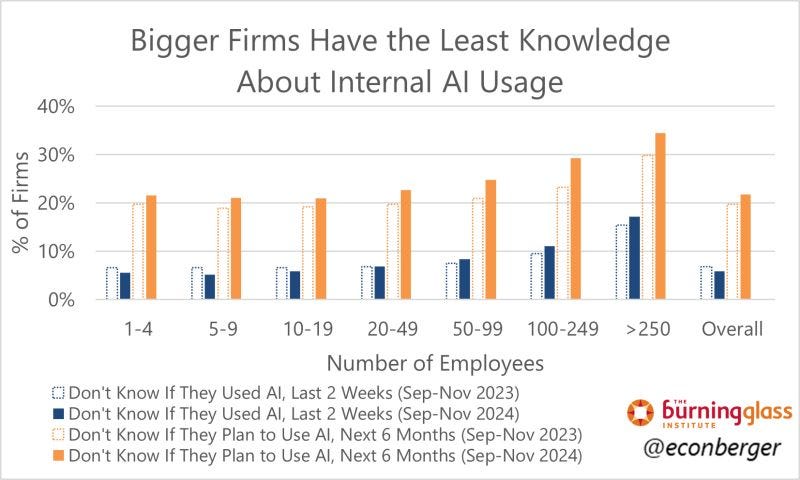

It is also worth considering that AI adoption is still much lower than we’d expect given all the hype. The implementation of AI, especially across large orgs, may take a decade or more because we’re fundamentally asking teams to change the way they work. Moreover, those in regulated industries need permission to change how they operate before they can even consider implementing AI products.

In the background, many workers are using AI without their employers’ knowledge, leading to an endless range of potential risks.

Mindset shifts to help implement AI

In the podcast, Spencer kept highlighting that we need to go into problem spaces with humility and without the expectation that problems are easy to solve.

Other guests have suggested other types of mindset shifts:

Jess Holbrook stated we need to be specific when we talk about AI: Too many projects are built on expectations, not specifications of what AI should do and how

Kristie J. Fisher believes we need to measure time well spent using AI: The best solution to adoption problems is making sure that the AI product delivers value AND time well spent

Josh Clark advocated for embracing the weirdness of AI: The imperfection of AI outputs should be viewed as a creative and innovative feature to help you explore new directions

Phillip Maggs challenges us to imagine new possibilities with AI: This is your time to spread your capabilities into areas you always wished were possible

Alexandra Holness expects that designers need to be less emotionally precious with AI: This is a time of uncertainty and what worked before may not work in the future, so designers especially will need to go into problem spaces with additional humility

Metalab is probably the premier design shop in North America. They’ve designed many of the most popular AI products on the market today. Sara Vienna, their VP Design, published a great manifesto about mindset shifts that’s worth a read. And she’ll be a guest on the podcast soon!

And for those wanting more of a blueprint: the Tertiary Education Quality and Standards Agency of Australia put together a guide that has lots of helpful detail on some of these.